Over the last few years I have performed many SQL Server Data Warehouse Database Performance Tuning Sessions with a variety of customers in many different environments. These sessions usually consist of a weeklong engagement in which I lead in-depth software (SQL Server) and hardware tuning classes. The results are put into a highly detailed roadmap and a list of recommendations for improved SQL Server Data Warehouse performance.

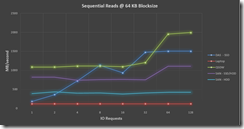

As part of this testing, I use SQLIO to benchmark the hardware performance capabilities of the SQL Server Data Warehouse database server that I am testing (see: Data Warehouse Storage Throughput (MB/sec)). There are of course many other types of tests and analyses that I perform as part of this weeklong process, but I am going to stick with SQLIO for now.

Since I have performed these tests many times, I would like to share some of the results that I have seen from various customer SQL Server Data Warehouse server environments.

Note: Even though these are aggregate figures from actual customers, given the complexity of each environment, your results may vary. They are meant to give you an idea of what kind of performance to expect.

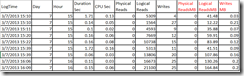

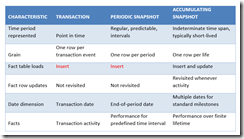

QSDW: QuickStart Data Warehouse 1000. This is a Dell Microsoft SQL Data Warehouse Appliance built to Microsoft FastTrack Reference Architecture best practices, such as parallelism.

DAS – SSD: Direct Attach Storage with Solid State Drives. SSDs not architected for Data Warehouse specific workloads.

SAN – SSD/HDD: Tier 1 SAN that uses a combination of Solid State Drives (SSD) and Hard Disk Drives (HDD). Not architected for Data Warehouse specific workloads.

SAN – HDD: Tier 2 SAN that uses only Hard Disk Drives (HDD). Not architected for Data Warehouse specific workloads.

Laptop: My personal laptop with one 7.2K RPM HDD.

As the graph shows, the Dell QuickStart Data Warehouse outperforms even the solid state drives and both types of SANs. This is due primarily to the fact that the QSDW is specifically tuned for data warehouse workloads, which are typically characterized by sequential reads and writes in block sizes greater than 64 KB. In fact, had I increased the block sizes for the tests, the gaps in performance would have increased.
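To make "sequential reads at a given block size" concrete, here is a minimal Python sketch that reads a file front to back in fixed-size blocks and reports MB/sec. It is not SQLIO and does not reproduce the figures above. The function names, the 8 MB scratch file, and the block sizes tried are all assumptions chosen for illustration, and because the operating system file cache is not bypassed, the numbers it prints mostly reflect memory speed rather than the disk.

```python
import os
import tempfile
import time


def sequential_read_mb_per_sec(path, block_size_kb=64):
    """Read the file front to back in fixed-size blocks.

    Returns (bytes_read, throughput_in_mb_per_sec).
    """
    block_size = block_size_kb * 1024
    total_bytes = 0
    start = time.perf_counter()
    # buffering=0 turns off Python's own buffer; the OS file cache still applies.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:
                break
            total_bytes += len(chunk)
    elapsed = time.perf_counter() - start
    mb_read = total_bytes / (1024 * 1024)
    return total_bytes, mb_read / max(elapsed, 1e-9)


def make_test_file(size_mb=8):
    """Create a hypothetical scratch file of random bytes and return its path."""
    fd, path = tempfile.mkstemp(suffix=".dat")
    with os.fdopen(fd, "wb") as f:
        for _ in range(size_mb):
            f.write(os.urandom(1024 * 1024))
    return path


if __name__ == "__main__":
    path = make_test_file(size_mb=8)
    try:
        # Block sizes are illustrative, not the ones used in the tests above.
        for kb in (8, 64, 512):
            n, rate = sequential_read_mb_per_sec(path, kb)
            print(f"{kb:>4} KB blocks: {rate:,.0f} MB/sec over {n} bytes")
    finally:
        os.remove(path)
```

A real storage benchmark needs a test file much larger than server memory and unbuffered I/O, which is what a dedicated tool such as SQLIO is for.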

Conclusion:

Ensuring that the Data Warehouse server is specifically tuned for Data Warehouse workloads can have a dramatic impact on performance. In fact, this tuning can be even more important than the type of storage technology selected, e.g. SAN, SSD, or HDD.

Note: The above graph shows server hardware performance, not SQL Server Database Engine performance. The SQLIO tool has nothing to do with SQL Server, even though the name might suggest otherwise.